He seems like a great consumer advocate and repair activist to me.

Haven’t gone more than 24 hours. If I had to guess, maybe 20 hours.

So glad people are dipping out of plex.

I thought he was talking about Amnesia: The Dark Descent and the Alien game combined; I had no clue that game was a thing.

macvtap

This looks like some type of bridge mode, which I don’t want. I want the VM to be isolated except for the forwarded Jellyfin ports. I think NAT mode with port forwarding is the best, if not the only, way to achieve this.

Well then your forwarding hook is broken and won’t work for the second VM.

Since it wasn’t clear, I assumed you meant something was wrong with the elif statement, so I ditched it.

```sh
    /sbin/iptables -t nat -I PREROUTING -p tcp --dport $HOST_PORT2 -j DNAT --to $GUEST_IP:$GUEST_PORT2
  fi
fi

if [ "${1}" = "Nginx" ]; then
```
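One way to keep a single libvirt hook working for more than one VM is to give each guest its own `case` branch, so a guest's rules are only touched when libvirt calls the hook with that guest's name (libvirt runs `/etc/libvirt/hooks/qemu` with the guest name as `$1` and the operation, such as `start`, `stopped` or `reconnect`, as `$2`). This is a minimal sketch, not the script from the post: the guest names, the host ports, and the Jellyfin VM's IP (192.168.101.50) are hypothetical; 192.168.101.85 is the nginx VM address from this thread; `virbr1` is assumed from the FORWARD listing; and `DRY_RUN` is a helper added only so the commands can be printed instead of run. It spells out `--to-destination` rather than `--to`.

```sh
#!/bin/sh
# Hypothetical sketch of /etc/libvirt/hooks/qemu handling more than one VM.
# libvirt runs the hook as: qemu <guest_name> <operation> <sub-operation> ...
# Set DRY_RUN=1 to print the iptables commands instead of running them.

IPT=/sbin/iptables
BRIDGE=virbr1  # assumption: the NAT bridge seen in the FORWARD listing

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# forward ACTION HOST_PORT GUEST_IP GUEST_PORT  (ACTION is -I or -D)
forward() {
  run "$IPT" "$1" FORWARD -o "$BRIDGE" -p tcp -d "$3" --dport "$4" -j ACCEPT
  run "$IPT" -t nat "$1" PREROUTING -p tcp --dport "$2" \
    -j DNAT --to-destination "$3:$4"
}

# One branch per guest; names, ports and the Jellyfin IP are hypothetical.
rules_for() {
  case "$1" in
    Nginx)    forward "$2" 8080 192.168.101.85 80 ;;
    Jellyfin) forward "$2" 8096 192.168.101.50 8096 ;;
  esac
}

# Remove rules when the guest stops, add them when it starts;
# on reconnect, delete then re-insert so rules are not duplicated.
hook_main() {
  if [ "$2" = stopped ] || [ "$2" = reconnect ]; then
    rules_for "$1" -D
  fi
  if [ "$2" = start ] || [ "$2" = reconnect ]; then
    rules_for "$1" -I
  fi
}

hook_main "$@"
```

Running `DRY_RUN=1 /etc/libvirt/hooks/qemu Nginx start` should print the two commands for the nginx guest without changing any rules, and a guest name with no branch produces no commands at all.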

My goal is to isolate Jellyfin and nginx from my seeding network. I’m not following any guide that wasn’t linked in the post.

I want the VM so my system is more modular and secure.

Sorry! The IP was wrong; the nginx VM is 192.168.101.85. (Edited.)

We’re all gonna need to use Whonix for basic shit now.

I ended up just installing Alma Linux again. Thank you very much for your help.

DO NOT follow my lead; my backup solution is scuffed at best.

I have:

I’ve got a hard drive and flash memory?

Don’t have this at all; the closest is that my phone is off-site half of the day.

Yes, the host is 192.168.86.73 and it has that DNAT rule.

And from the guest:

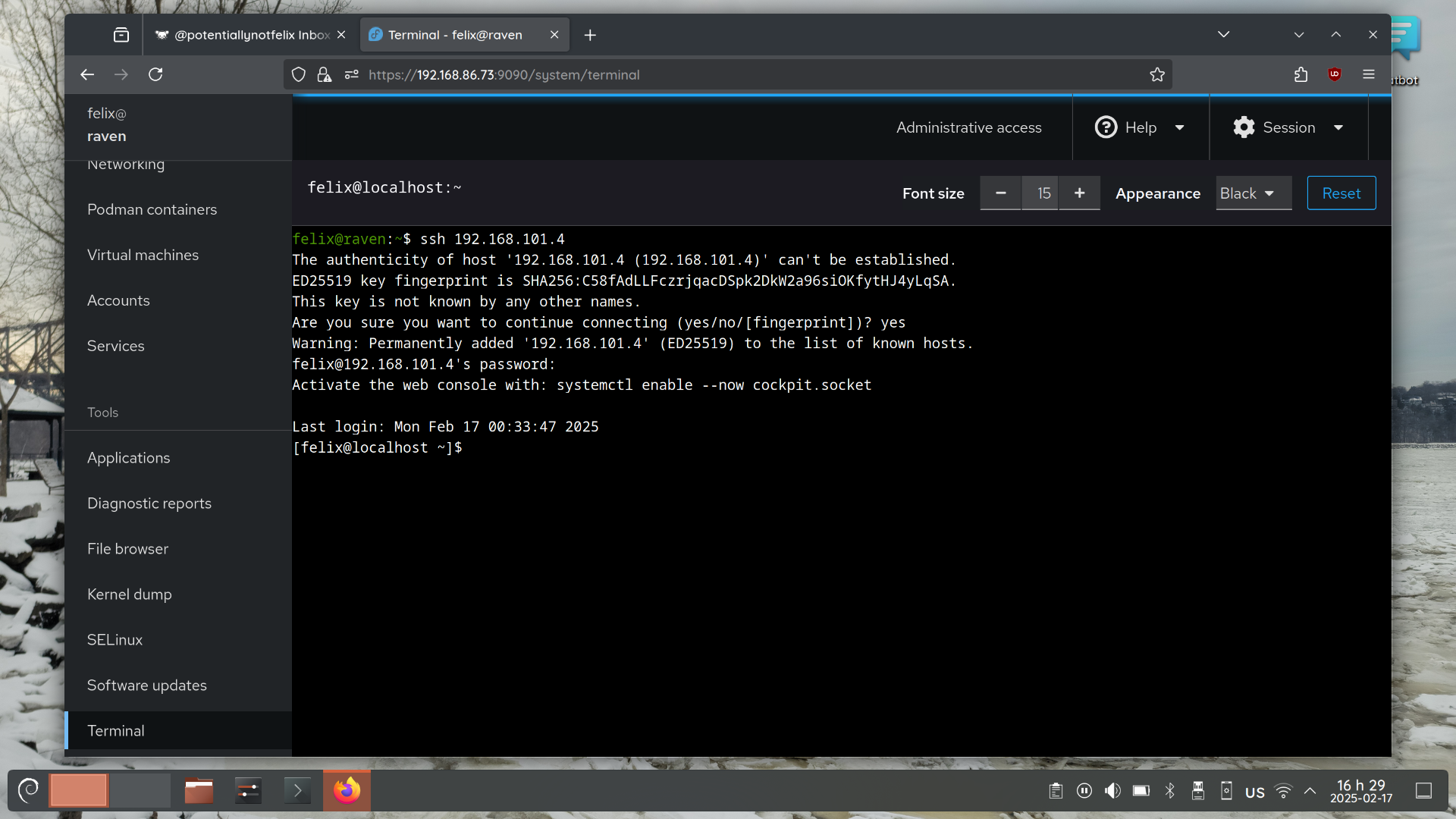

Assuming you meant from the host, I am sshing directly to 192.168.101.4 instead of to 192.168.86.73:2222.

The third paragraph doesn’t make sense to me. I am using port 22 on my host (192.168.86.73) for its own SSH.

tcpdump returns this when I ssh to port 2222:

```
20:32:29.957942 IP (tos 0x10, ttl 64, id 28091, offset 0, flags [DF], proto TCP (6), length 60)
    192.168.86.23.53434 > 192.168.86.73.2222: Flags [S], cksum 0x5d75 (correct), seq 1900319834, win 64240, options [mss 1460,sackOK,TS val 3627223725 ecr 0,nop,wscale 7], length 0
```

192.168.101.4 is the Alma guest. It’s got port 22 open, and I can ssh into it from the host computer.

iptables -nvL on Alma returns:

```
Chain INPUT (policy ACCEPT 0 packets, 0 bytes)
pkts bytes target prot opt in out source destination

Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)
pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 0 packets, 0 bytes)
pkts bytes target prot opt in out source destination
```

I believe this means it accepts all connections: every chain’s policy is ACCEPT and there are no rules.

IMO this makes it unlikely that the guest is the issue.
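The reasoning above (every policy is ACCEPT and no rules are listed) can be checked mechanically from `iptables -S`, which prints policies as `-P CHAIN POLICY` lines and rules as `-A CHAIN ...` lines. This is a minimal sketch; `policy_summary` is a hypothetical helper name, and it only sees what `iptables` itself reports, so rules managed directly through nftables (for example by firewalld) would not show up here.

```sh
#!/bin/sh
# policy_summary: hypothetical helper. Reads `iptables -S` output on stdin.
# Prints "accept all" when every chain policy is ACCEPT and there are no
# "-A" rules; otherwise prints "check rules".
policy_summary() {
  awk '
    $1 == "-P" && $3 != "ACCEPT" { strict = 1 }
    $1 == "-A"                   { rules = 1 }
    END { print ((strict || rules) ? "check rules" : "accept all") }
  '
}
```

On the guest, `iptables -S | policy_summary` (run as root) would print `accept all` for a ruleset like the one listed above.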

Twitter is fucked and requires a login for everything, but Reddit seems to work fine without one.

ssh -v returns:

```
OpenSSH_9.2p1 Debian-2+deb12u4, OpenSSL 3.0.15 3 Sep 2024
debug1: Reading configuration data /etc/ssh/ssh_config
debug1: /etc/ssh/ssh_config line 19: include /etc/ssh/ssh_config.d/*.conf matched no files
debug1: /etc/ssh/ssh_config line 21: Applying options for *
debug1: Connecting to 192.168.86.73 [192.168.86.73] port 2222.
debug1: connect to address 192.168.86.73 port 2222: Connection refused
ssh: connect to host 192.168.86.73 port 2222: Connection refused
```

No firewalls on the client, but iptables on the host and the guest. The guest has no rules (it just allows all), and the host rules are listed in the post.

sysctl net.ipv4.ip_forward returns:

```
net.ipv4.ip_forward = 1
```

So I’m pretty sure that this is already enabled. Thanks for your answer!
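The same value that `sysctl net.ipv4.ip_forward` prints can be read from `/proc/sys/net/ipv4/ip_forward`, which makes it easy to check from a script. A minimal sketch; `ip_forward_enabled` is a hypothetical helper name, and its optional path argument exists only so it can be pointed at a test file.

```sh
#!/bin/sh
# ip_forward_enabled: hypothetical helper. Exits 0 when IPv4 forwarding is on.
# Reads the value that `sysctl net.ipv4.ip_forward` reports; the optional
# argument overrides the procfs path (used only for testing).
ip_forward_enabled() {
  ipf_path=${1:-/proc/sys/net/ipv4/ip_forward}
  [ "$(cat "$ipf_path")" = "1" ]
}
```

For example, `ip_forward_enabled && echo "forwarding is on"` on the host should print the message given the sysctl output above.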

From the iptables manpage:

```
--to offset
       Set the offset from which it starts looking for any matching. If not passed, default is the packet size.

...

--to-destination ipaddr-ipaddr
       Address range to round-robin over.
```

This seems to do something, but the port still appears as closed.

iptables -nvL returns:

```
Chain INPUT (policy ACCEPT 0 packets, 0 bytes)
pkts bytes target prot opt in out source destination

Chain FORWARD (policy ACCEPT 369 packets, 54387 bytes)
pkts bytes target prot opt in out source destination
5 300 ACCEPT 6 -- * virbr1 0.0.0.0/0 192.168.101.4 tcp dpt:22
84 6689 ACCEPT 0 -- * br-392a16e9359d 0.0.0.0/0 0.0.0.0/0 ctstate RELATED,ESTABLISHED
7 418 DOCKER 0 -- * br-392a16e9359d 0.0.0.0/0 0.0.0.0/0
146 9410 ACCEPT 0 -- br-392a16e9359d !br-392a16e9359d 0.0.0.0/0 0.0.0.0/0
0 0 ACCEPT 0 -- br-392a16e9359d br-392a16e9359d 0.0.0.0/0 0.0.0.0/0

Chain OUTPUT (policy ACCEPT 0 packets, 0 bytes)
pkts bytes target prot opt in out source destination
```

I’ve omitted some listings that were labelled as docker.

iptables -t nat -nvL returns:

```
Chain PREROUTING (policy ACCEPT 626 packets, 90758 bytes)
pkts bytes target prot opt in out source destination
5 300 DNAT 6 -- * * 0.0.0.0/0 0.0.0.0/0 tcp dpt:2222 to:192.168.101.4:22

Chain INPUT (policy ACCEPT 0 packets, 0 bytes)
pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 154 packets, 12278 bytes)
pkts bytes target prot opt in out source destination
0 0 DOCKER 0 -- * * 0.0.0.0/0 !127.0.0.0/8 ADDRTYPE match dst-type LOCAL

Chain POSTROUTING (policy ACCEPT 290 packets, 22404 bytes)
pkts bytes target prot opt in out source destination
0 0 MASQUERADE 0 -- * !br-392a16e9359d 172.18.0.0/16 0.0.0.0/0
```

I’ve also omitted some listings that were labelled as docker.

Each ssh attempt increases the DNAT rule’s packet and byte counters (5 packets / 300 bytes in the listing above).

After one ssh attempt:

```
7 420 DNAT 6 -- * * 0.0.0.0/0 0.0.0.0/0 tcp dpt:2222 to:192.168.101.4:22
```

After another ssh attempt:

```
8 480 DNAT 6 -- * * 0.0.0.0/0 0.0.0.0/0 tcp dpt:2222 to:192.168.101.4:22
```
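Dividing each byte counter by its packet counter shows that every packet the DNAT rule matched is 60 bytes, the same `length 60` tcpdump reported for the incoming SYN from 192.168.86.23. That is consistent with the rule matching the SSH connection attempts. A quick check, where `bytes_per_packet` is a hypothetical helper and the snapshots are copied from the listings above:

```sh
#!/bin/sh
# bytes_per_packet PKTS BYTES: hypothetical helper; integer division of an
# iptables byte counter by its packet counter.
bytes_per_packet() {
  echo $(( $2 / $1 ))
}

# Counter snapshots (pkts bytes) of the DNAT rule from the listings above.
for snap in "5 300" "7 420" "8 480"; do
  set -- $snap
  echo "$1 pkts, $2 bytes: $(bytes_per_packet "$1" "$2") bytes/packet"
done
```

Each line prints 60 bytes/packet; between snapshots the counters grew by 2 packets (120 bytes) and then by 1 packet (60 bytes).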

No, I tried to find the element before he shared this.

1984